(deprecated) Streaming API v1 Integration: Raw WebRTC Approach

v1 Raw WebRTC will not be supported after 2/28/25. We recommend transitioning to the Streaming Avatar SDK or using the Streaming API with version: v2 (LiveKit).

This guide explains how to integrate the Streaming API using the v1 version, which uses raw WebRTC for maximum flexibility and compatibility. For an easier implementation, HeyGen’s v2 API leverages LiveKit with pre-built tools, at the cost of some environment flexibility; see the Streaming Avatar SDK section.

Implementation Guide

Here, we'll walk you through setting up WebRTC from scratch, enabling you to interact with HeyGen’s interactive avatars using raw WebRTC endpoints.

Prerequisites

- API Token from HeyGen.

- Basic understanding of WebRTC concepts (e.g., SDP, ICE candidates)

- Web server capable of serving static files and handling API requests

Key Endpoints

- Create New Streaming Session

POST /v1/streaming.new: Initializes a new session and provides the server SDP and ICE servers.

- Start Streaming Session

POST /v1/streaming.start: Begins the WebRTC session by exchanging SDP.

- Submit ICE Candidates

POST /v1/streaming.ice: Facilitates the exchange of ICE candidates to establish peer-to-peer connectivity.

- Send Custom Tasks

POST /v1/streaming.task: Sends text to an Interactive Avatar, prompting it to speak the provided text.

- Close Session

POST /v1/streaming.stop: Terminates an active streaming session.
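In the code used throughout this guide, every one of these endpoints is called the same way: a POST with a JSON body and an X-Api-Key header. As a minimal sketch, that shared shape can be built in one place. The name buildRequest is hypothetical and introduced here only for illustration; it is not part of the HeyGen API.

```javascript
// Hypothetical helper: builds the URL and fetch options shared by the v1 endpoints.
function buildRequest(serverUrl, apiKey, endpoint, body) {
  return {
    url: `${serverUrl}/v1/${endpoint}`,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'X-Api-Key': apiKey,
      },
      body: JSON.stringify(body),
    },
  };
}

// Example: the request for closing a session (placeholder key and session ID).
const stopRequest = buildRequest('https://api.heygen.com', 'YOUR_API_KEY', 'streaming.stop', {
  session_id: 'SESSION_ID',
});
console.log(stopRequest.url); // https://api.heygen.com/v1/streaming.stop
```

The result can be passed straight to fetch as fetch(stopRequest.url, stopRequest.options).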

Step 1. Basic Setup

Create an HTML file with necessary elements:

<div class="main">

<div class="controls">

<button id="startBtn">Start</button>

<button id="closeBtn">Close</button>

</div>

<div class="input-section">

<input id="taskInput" type="text" placeholder="Enter text"/>

<button id="talkBtn">Talk</button>

</div>

<video id="mediaElement" autoplay></video>

</div>

<script>

// JavaScript code goes here

</script>

This provides the UI elements to control the session (start, close, send text) and display the avatar video.

Step 2. API Configuration

const API_CONFIG = {

apiKey: 'YOUR_API_KEY',

serverUrl: 'https://api.heygen.com'

};

// Global variables

let sessionInfo = null;

let peerConnection = null;

// DOM Elements

const mediaElement = document.getElementById("mediaElement");

const taskInput = document.getElementById("taskInput");

Configuration considerations:

- Keep API keys secure and never expose them in client-side code

- Use environment variables for production deployments

- Initialize WebRTC state variables in a clean scope
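One way to follow the first two recommendations is to keep the key on a server and read it from the environment there. The sketch below assumes a Node.js backend; the environment variable names HEYGEN_API_KEY and HEYGEN_SERVER_URL and the loadApiConfig helper are assumptions made for illustration, not names defined by HeyGen.

```javascript
// Hypothetical server-side helper: reads the API key from an environment object
// (process.env in Node.js) instead of hard-coding it in browser code.
function loadApiConfig(env) {
  const apiKey = env.HEYGEN_API_KEY; // assumed variable name
  if (!apiKey) {
    throw new Error('HEYGEN_API_KEY is not set');
  }
  return {
    apiKey,
    // Optional override (assumed name); defaults to the URL used in this guide.
    serverUrl: env.HEYGEN_SERVER_URL || 'https://api.heygen.com',
  };
}

// Example with an explicit object; a real server would pass process.env.
const exampleConfig = loadApiConfig({ HEYGEN_API_KEY: 'example-key' });
console.log(exampleConfig.serverUrl); // https://api.heygen.com
```

Failing fast when the variable is missing tends to surface deployment mistakes earlier than a rejected API call would.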

Step 3. Core WebRTC Implementation

3.1 Create New Session

This step establishes the initial WebRTC connection and prepares for media streaming:

async function createNewSession() {

// Step 1: Initialize streaming session

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.new`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

quality: 'high',

avatar_name: '',

voice: {

voice_id: '',

},

}),

});

const data = await response.json();

sessionInfo = data.data;

// Step 2: Setup WebRTC peer connection

const { sdp: serverSdp, ice_servers2: iceServers } = sessionInfo;

// Initialize with ICE servers for NAT traversal

peerConnection = new RTCPeerConnection({ iceServers });

// Step 3: Configure media handling

peerConnection.ontrack = (event) => {

// Attach incoming media stream to video element

if (event.track.kind === 'audio' || event.track.kind === 'video') {

mediaElement.srcObject = event.streams[0];

console.log('Received media stream'); // the complete demo code logs this through its updateStatus helper instead

}

};

// Step 4: Set server's SDP offer

await peerConnection.setRemoteDescription(new RTCSessionDescription(serverSdp));

}

- The createNewSession function initializes the session and retrieves the server’s SDP (Session Description Protocol) and ICE (Interactive Connectivity Establishment) servers for WebRTC.

- It then sets up the WebRTC connection and listens for incoming media streams (audio and video).
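The function above reads data.data without checking it, so a failed request surfaces later as a less obvious error. A minimal sketch of a guard follows; extractSessionInfo is a hypothetical helper, and the field names it checks (data, session_id, sdp, ice_servers2) are simply the ones this guide's code reads, not a full description of the response.

```javascript
// Hypothetical validator for the parsed JSON body returned by /v1/streaming.new.
function extractSessionInfo(payload) {
  const info = payload && payload.data;
  if (!info || !info.session_id) {
    throw new Error('streaming.new response has no session_id');
  }
  if (!info.sdp || typeof info.sdp !== 'object') {
    throw new Error('streaming.new response has no server SDP');
  }
  if (!Array.isArray(info.ice_servers2)) {
    throw new Error('streaming.new response has no ICE server list');
  }
  return info;
}

// Made-up payload for illustration only; real values come from the API.
const samplePayload = {
  data: {
    session_id: 'SESSION_ID',
    sdp: { type: 'offer', sdp: 'v=0' },
    ice_servers2: [{ urls: ['stun:stun.example.org:3478'] }],
  },
};
const checkedInfo = extractSessionInfo(samplePayload);
console.log(checkedInfo.session_id); // SESSION_ID
```

In createNewSession, this would replace the direct assignment, for example sessionInfo = extractSessionInfo(data).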

3.2 Start Streaming Session

This step completes the WebRTC handshake and establishes the media connection:

async function startStreamingSession() {

// Step 1: Create client's SDP answer

const localDescription = await peerConnection.createAnswer();

await peerConnection.setLocalDescription(localDescription);

// Step 2: Handle ICE candidate gathering

peerConnection.onicecandidate = ({ candidate }) => {

if (candidate) {

// Send candidates to server for connection establishment

handleICE(sessionInfo.session_id, candidate.toJSON());

}

};

// Step 3: Monitor connection states

peerConnection.oniceconnectionstatechange = () => {

const state = peerConnection.iceConnectionState;

console.log(`ICE Connection State: ${state}`);

// Connection states and their meanings:

// - 'checking': Testing connection paths

// - 'connected': Media flowing

// - 'completed': All ICE candidates verified

// - 'failed': No working connection found

// - 'disconnected': Temporary connectivity issues

// - 'closed': Connection terminated

};

// Step 4: Complete connection setup

const startResponse = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.start`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

sdp: localDescription, // Client's supported configuration

}),

});

}

- This function generates the local SDP answer and sends it to the server.

- It also handles ICE candidates that are gathered by WebRTC and exchanges them with the server to establish a peer-to-peer connection.

- The ICE connection state is logged to monitor the connection’s progress.
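The state meanings listed in the code comments can also be kept in a lookup table so that the log output explains itself. This is a sketch only: summarizeIceState is a hypothetical helper, and the note for the 'new' state is added here because it is a standard RTCPeerConnection state that the comments do not list.

```javascript
// Notes taken from the comments in startStreamingSession, plus the standard 'new' state.
const ICE_STATE_NOTES = {
  new: 'Waiting to start connectivity checks',
  checking: 'Testing connection paths',
  connected: 'Media flowing',
  completed: 'All ICE candidates verified',
  failed: 'No working connection found',
  disconnected: 'Temporary connectivity issues',
  closed: 'Connection terminated',
};

// Hypothetical helper: turns a raw state string into a small summary object.
function summarizeIceState(state) {
  return {
    state,
    note: ICE_STATE_NOTES[state] || 'Unknown state',
    isUsable: state === 'connected' || state === 'completed',
    isFailed: state === 'failed',
  };
}

console.log(summarizeIceState('connected').note); // Media flowing
```

Inside oniceconnectionstatechange, the handler could call summarizeIceState(peerConnection.iceConnectionState) and log the note alongside the state.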

3.3 Handle ICE candidates

async function handleICE(session_id, candidate) {

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.ice`, {

method: "POST",

headers: {

"Content-Type": "application/json",

"X-Api-Key": API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id,

candidate,

}),

});

}

- ICE candidates are submitted to the server to help establish a connection between peers through NAT traversal.
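The request body pairs the session ID with the object returned by candidate.toJSON(). As a sketch, the body can be built by a small function that also skips empty candidates, which browsers may emit to signal the end of gathering. buildIceBody is a hypothetical name, and the candidate string below is made up for illustration.

```javascript
// Hypothetical helper: builds the JSON body for /v1/streaming.ice,
// or returns null when there is nothing to send.
function buildIceBody(sessionId, candidateInit) {
  if (!sessionId) {
    throw new Error('session_id is required');
  }
  if (!candidateInit || !candidateInit.candidate) {
    return null; // null or empty candidate: end of gathering, nothing to submit
  }
  return JSON.stringify({ session_id: sessionId, candidate: candidateInit });
}

// Shape of RTCIceCandidate.toJSON() output, with made-up values.
const sampleCandidate = {
  candidate: 'candidate:1 1 udp 2122260223 192.0.2.1 54321 typ host',
  sdpMid: '0',
  sdpMLineIndex: 0,
  usernameFragment: 'abcd',
};
const iceBody = buildIceBody('SESSION_ID', sampleCandidate);
console.log(JSON.parse(iceBody).candidate.sdpMid); // 0
```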

3.4 Send Text to Avatar

To interact with the avatar, you can send text for it to speak:

async function sendText(text) {

await fetch(`${API_CONFIG.serverUrl}/v1/streaming.task`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

text: text

})

});

}

3.5 Close Session

To terminate the session and close the WebRTC connection:

async function closeSession() {

await fetch(`${API_CONFIG.serverUrl}/v1/streaming.stop`, {

method: "POST",

headers: {

"Content-Type": "application/json",

"X-Api-Key": API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

}),

});

if (peerConnection) {

peerConnection.close();

peerConnection = null;

}

sessionInfo = null;

mediaElement.srcObject = null;

}

- This function stops the session by sending a request to the /v1/streaming.stop endpoint and then cleans up the WebRTC connection.
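If the request to /v1/streaming.stop rejects (for example, on a network error), closeSession exits before the local cleanup runs. One option, sketched here under the assumption that the session state is held in a single object rather than in separate globals, is to move the cleanup into its own function and call it from a finally block. releaseLocalResources is a hypothetical name.

```javascript
// Hypothetical helper: local cleanup only, no network call.
function releaseLocalResources(state) {
  if (state.peerConnection) {
    state.peerConnection.close();
  }
  if (state.mediaElement) {
    state.mediaElement.srcObject = null;
  }
  state.peerConnection = null;
  state.sessionInfo = null;
  return state;
}

// Stand-in objects so the sketch runs outside a browser.
let closeCalls = 0;
const demoState = {
  sessionInfo: { session_id: 'SESSION_ID' },
  peerConnection: { close: () => { closeCalls += 1; } },
  mediaElement: { srcObject: {} },
};
releaseLocalResources(demoState);
console.log(demoState.peerConnection, closeCalls); // null 1
```

In closeSession, the fetch call would sit inside try and releaseLocalResources inside finally, so the peer connection is closed whether or not the request succeeds.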

Step 4. Event Listeners

Finally, attach event listeners to the buttons for controlling the session:

async function handleStart() {

await createNewSession();

await startStreamingSession();

}

document.querySelector("#startBtn").addEventListener("click", handleStart);

document.querySelector("#closeBtn").addEventListener("click", closeSession);

document.querySelector("#talkBtn").addEventListener("click", () => {

const text = taskInput.value.trim();

if (text) {

sendText(text);

taskInput.value = "";

}

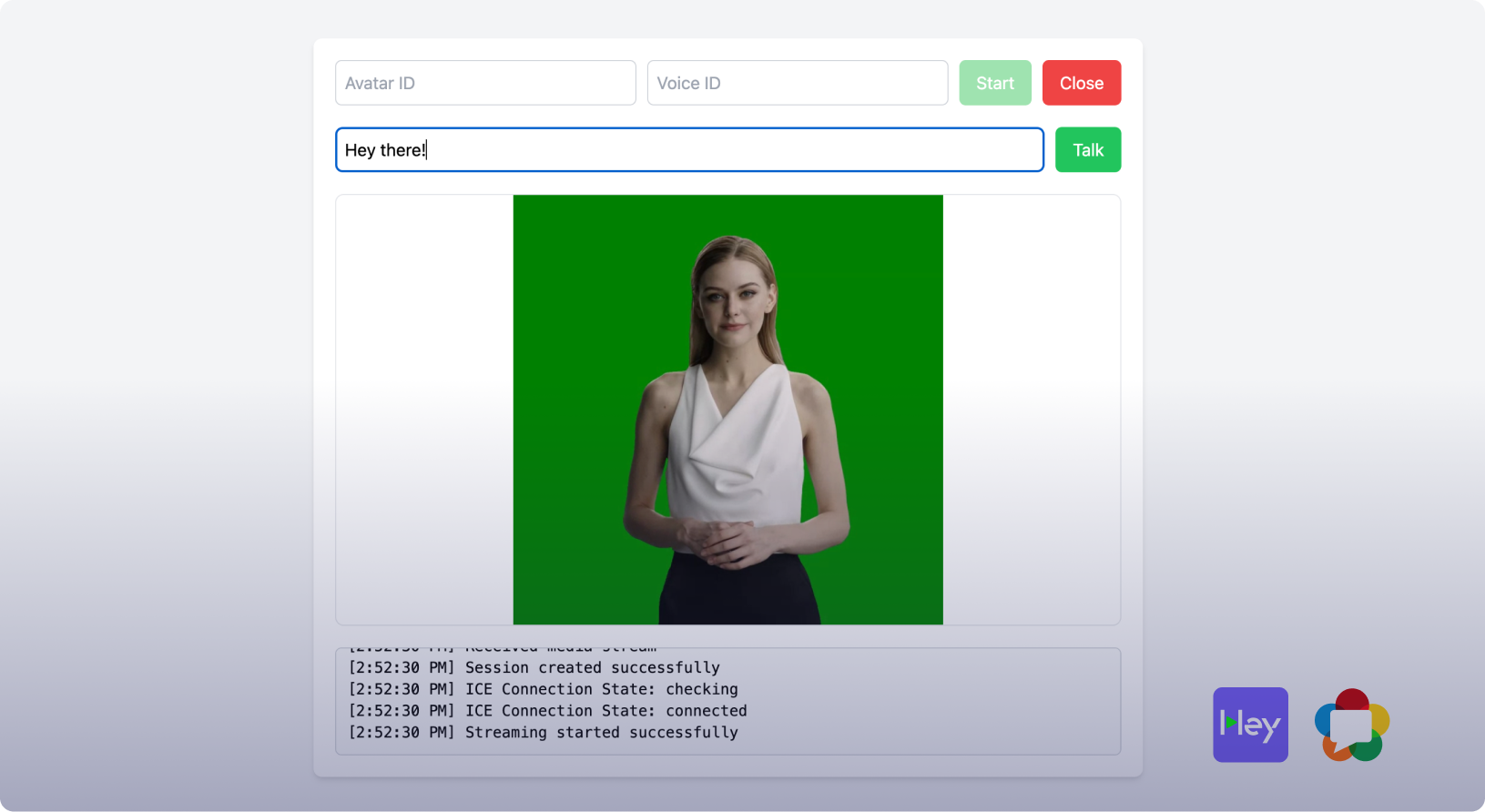

});Complete Demo Code

Here's a full working HTML & JS implementation combining all the components with a basic frontend:

<!doctype html>

<html lang="en">

<head>

<title>HeyGen Streaming API (V1 - WebRTC)</title>

<script src="https://cdn.tailwindcss.com"></script>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1.0" />

</head>

<body class="bg-gray-100 p-5 font-sans">

<div class="max-w-3xl mx-auto bg-white p-5 rounded-lg shadow-md">

<div class="flex flex-wrap gap-2.5 mb-5">

<input

id="avatarID"

type="text"

placeholder="Avatar ID"

class="flex-1 min-w-[200px] p-2 border border-gray-300 rounded-md"

/>

<input

id="voiceID"

type="text"

placeholder="Voice ID"

class="flex-1 min-w-[200px] p-2 border border-gray-300 rounded-md"

/>

<button

id="startBtn"

class="px-4 py-2 bg-green-500 text-white rounded-md hover:bg-green-600 transition-colors disabled:opacity-50 disabled:cursor-not-allowed"

>

Start

</button>

<button

id="closeBtn"

class="px-4 py-2 bg-red-500 text-white rounded-md hover:bg-red-600 transition-colors"

>

Close

</button>

</div>

<div class="flex flex-wrap gap-2.5 mb-5">

<input

id="taskInput"

type="text"

placeholder="Enter text for avatar to speak"

class="flex-1 min-w-[200px] p-2 border border-gray-300 rounded-md"

/>

<button

id="talkBtn"

class="px-4 py-2 bg-green-500 text-white rounded-md hover:bg-green-600 transition-colors"

>

Talk

</button>

</div>

<video id="mediaElement" class="w-full max-h-[400px] border rounded-lg my-5" autoplay></video>

<div

id="status"

class="p-2.5 bg-gray-50 border border-gray-300 rounded-md h-[100px] overflow-y-auto font-mono text-sm"

></div>

</div>

<script>

// Configuration

const API_CONFIG = {

apiKey: 'your_api_key',

serverUrl: 'https://api.heygen.com',

};

// Global variables

let sessionInfo = null;

let peerConnection = null;

// DOM Elements

const statusElement = document.getElementById('status');

const mediaElement = document.getElementById('mediaElement');

const avatarID = document.getElementById('avatarID');

const voiceID = document.getElementById('voiceID');

const taskInput = document.getElementById('taskInput');

// Helper function to update status

function updateStatus(message) {

const timestamp = new Date().toLocaleTimeString();

statusElement.innerHTML += `[${timestamp}] ${message}<br>`;

statusElement.scrollTop = statusElement.scrollHeight;

}

// Create new session

async function createNewSession() {

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.new`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

quality: 'high',

avatar_name: avatarID.value,

voice: {

voice_id: voiceID.value,

},

}),

});

const data = await response.json();

sessionInfo = data.data;

// Setup WebRTC connection

const { sdp: serverSdp, ice_servers2: iceServers } = sessionInfo;

peerConnection = new RTCPeerConnection({ iceServers });

// Handle incoming media streams

peerConnection.ontrack = (event) => {

if (event.track.kind === 'audio' || event.track.kind === 'video') {

mediaElement.srcObject = event.streams[0];

updateStatus('Received media stream');

}

};

// Set remote description

await peerConnection.setRemoteDescription(new RTCSessionDescription(serverSdp));

updateStatus('Session created successfully');

}

// Start streaming session

async function startStreamingSession() {

// Create and set local description

const localDescription = await peerConnection.createAnswer();

await peerConnection.setLocalDescription(localDescription);

// Handle ICE candidates

peerConnection.onicecandidate = ({ candidate }) => {

if (candidate) {

handleICE(sessionInfo.session_id, candidate.toJSON());

}

};

// Monitor connection state changes

peerConnection.oniceconnectionstatechange = () => {

updateStatus(`ICE Connection State: ${peerConnection.iceConnectionState}`);

};

// Start streaming

const startResponse = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.start`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

sdp: localDescription,

}),

});

updateStatus('Streaming started successfully');

document.querySelector('#startBtn').disabled = true;

}

// Handle start button click

async function handleStart() {

try {

await createNewSession();

await startStreamingSession();

} catch (error) {

console.error('Error starting session:', error);

updateStatus(`Error: ${error.message}`);

}

}

// Handle ICE candidates

async function handleICE(session_id, candidate) {

try {

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.ice`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id,

candidate,

}),

});

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

} catch (error) {

console.error('ICE candidate error:', error);

}

}

// Send text to avatar

async function sendText(text) {

if (!sessionInfo) {

updateStatus('No active session');

return;

}

try {

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.task`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

text,

}),

});

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

updateStatus(`Sent text: ${text}`);

} catch (error) {

updateStatus(`Error sending text: ${error.message}`);

console.error('Send text error:', error);

}

}

// Close session

async function closeSession() {

if (!sessionInfo) {

return;

}

try {

const response = await fetch(`${API_CONFIG.serverUrl}/v1/streaming.stop`, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

'X-Api-Key': API_CONFIG.apiKey,

},

body: JSON.stringify({

session_id: sessionInfo.session_id,

}),

});

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

if (peerConnection) {

peerConnection.close();

peerConnection = null;

}

sessionInfo = null;

mediaElement.srcObject = null;

document.querySelector('#startBtn').disabled = false;

updateStatus('Session closed');

} catch (error) {

updateStatus(`Error closing session: ${error.message}`);

console.error('Close session error:', error);

}

}

// Event Listeners

document.querySelector('#startBtn').addEventListener('click', handleStart);

document.querySelector('#closeBtn').addEventListener('click', closeSession);

document.querySelector('#talkBtn').addEventListener('click', () => {

const text = taskInput.value.trim();

if (text) {

sendText(text);

taskInput.value = '';

}

});

</script>

</body>

</html>

Troubleshooting Guide

Common Issues and Solutions:

- Connection Failures: Ensure ICE servers are accessible and check if the firewall is blocking WebRTC traffic.

- Media Stream Issues: Ensure that both the client device and browser support required codecs and that bandwidth is sufficient.

- Performance Problems: Monitor network conditions, adjust quality settings, and track CPU usage.
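When connections fail behind a restrictive firewall, it can help to check whether the ICE server list returned for the session includes any TURN relays, since STUN alone may not be enough in that situation. The sketch below counts entries by URL scheme; summarizeIceServers is a hypothetical helper and the server list is made up for illustration.

```javascript
// Hypothetical helper: counts STUN and TURN URLs in an RTCIceServer-style list.
function summarizeIceServers(iceServers) {
  const summary = { stun: 0, turn: 0 };
  for (const server of iceServers) {
    // "urls" may be a single string or an array of strings.
    const urls = Array.isArray(server.urls) ? server.urls : [server.urls];
    for (const url of urls) {
      if (typeof url !== 'string') continue;
      if (url.startsWith('stun:') || url.startsWith('stuns:')) summary.stun += 1;
      if (url.startsWith('turn:') || url.startsWith('turns:')) summary.turn += 1;
    }
  }
  return summary;
}

// Made-up list; in the guide's code the real one is sessionInfo.ice_servers2.
const sampleServers = [
  { urls: 'stun:stun.example.org:3478' },
  {
    urls: ['turn:turn.example.org:3478?transport=udp', 'turns:turn.example.org:5349'],
    username: 'user',
    credential: 'secret',
  },
];
console.log(summarizeIceServers(sampleServers)); // { stun: 1, turn: 2 }
```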

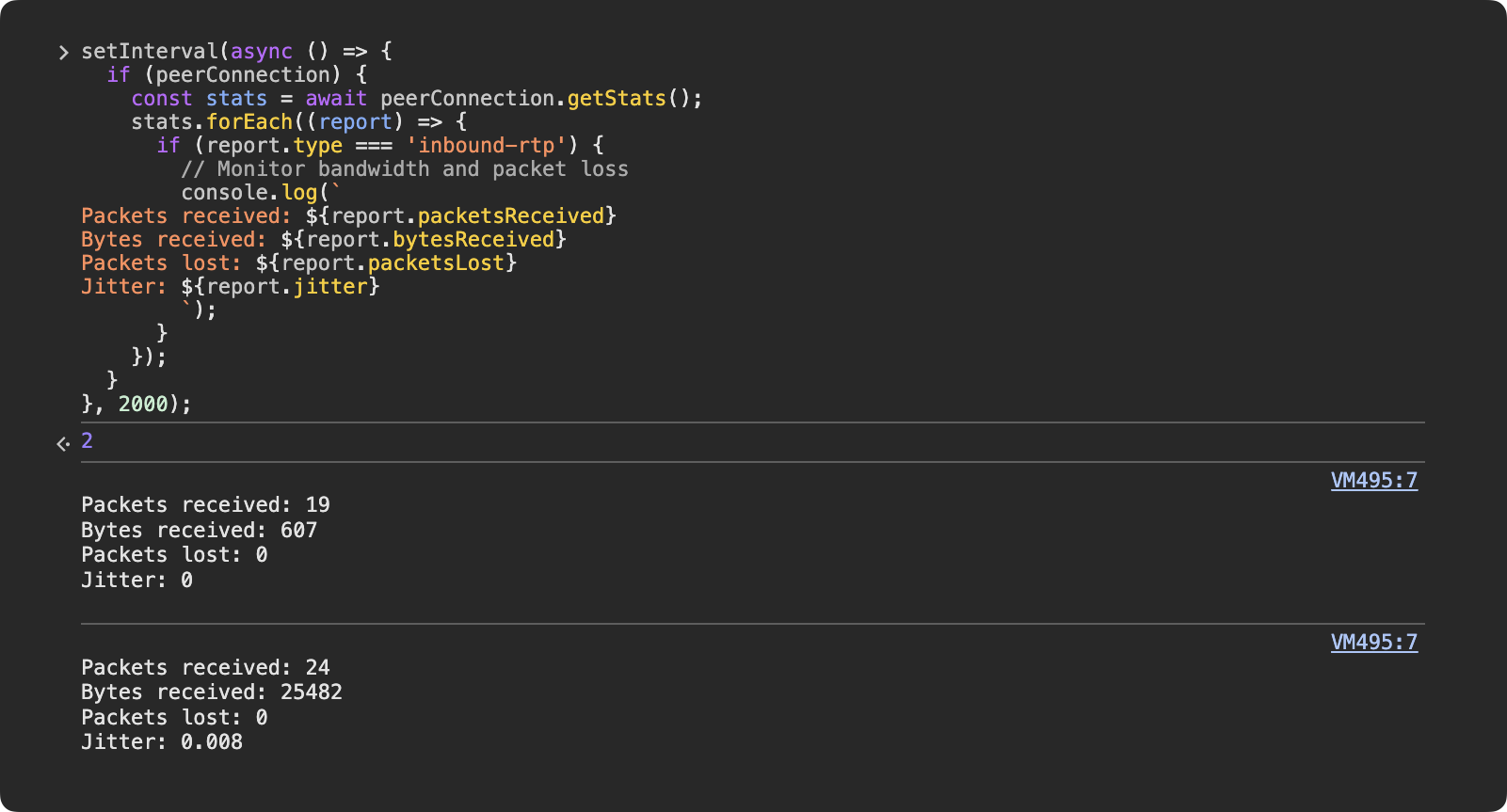

Debugging WebRTC Connection:

Use getStats to monitor WebRTC statistics.

setInterval(async () => {

if (peerConnection) {

const stats = await peerConnection.getStats();

stats.forEach((report) => {

if (report.type === 'inbound-rtp') {

// Monitor bandwidth and packet loss

console.log(`

Packets received: ${report.packetsReceived}

Bytes received: ${report.bytesReceived}

Packets lost: ${report.packetsLost}

Jitter: ${report.jitter}

`);

}

});

}

}, 2000);
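The raw counters logged above are cumulative, so they are easier to read once converted into a loss percentage and a bitrate between two samples. This is a sketch with hypothetical helper names; it relies only on the packetsReceived, packetsLost, bytesReceived, and timestamp fields of inbound-rtp reports, with timestamp in milliseconds.

```javascript
// Share of packets lost so far, as a percentage of all expected packets.
function packetLossPercent(packetsReceived, packetsLost) {
  const lost = Math.max(packetsLost, 0); // packetsLost can be negative when duplicates arrive
  const total = packetsReceived + lost;
  if (total <= 0) return 0;
  return (lost * 100) / total;
}

// Average incoming bitrate between two samples of the same report, in kbit/s.
function bitrateKbps(previous, current) {
  const seconds = (current.timestamp - previous.timestamp) / 1000;
  if (seconds <= 0) return 0;
  const bits = (current.bytesReceived - previous.bytesReceived) * 8;
  return bits / seconds / 1000;
}

// Made-up samples taken 2 seconds apart.
const earlier = { timestamp: 0, bytesReceived: 0 };
const later = { timestamp: 2000, bytesReceived: 500000 };
console.log(packetLossPercent(990, 10)); // 1
console.log(bitrateKbps(earlier, later)); // 2000
```

To use this with the polling loop, keep the previous inbound-rtp report for each track and pass it together with the current one on the next tick.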

Conclusion

Following the steps in this guide, you can successfully integrate HeyGen's streaming avatar feature into your application using raw WebRTC. This approach offers greater flexibility and control, particularly for custom configurations. If you encounter any issues, the HeyGen support team is available to assist you.
