
Interactive Avatar streaming: voice chat

Hi, I'm having problems integrating the Interactive Avatar.

Here is how I expect the process to work (a very simplified version):

1. Initialize the avatar.
2. Ask GPT for an opening phrase (done by me; I have a backend that communicates with GPT).
3. Have the avatar say the GPT response.
4. Listen to the user's input and transcribe it (both done by the HeyGen SDK; I only take the transcription from the SDK).
5. Ask GPT for a response based on what the user said (again, done by me and my backend).
6. Go back to step 3 and repeat indefinitely.
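As a minimal sketch, the loop I have in mind looks roughly like this. All names here (`ConversationAvatar`, `ChatBackend`, `speak`, `nextUserTranscript`, `ask`, `runConversation`) are hypothetical and are not HeyGen SDK APIs; in real code `speak` would wrap the SDK call that makes the avatar talk, and `nextUserTranscript` would resolve when the SDK delivers the user's transcription (the part I can't get working). `maxTurns` only bounds the "repeat indefinitely" part so the sketch terminates.

```typescript
// Hypothetical interfaces (NOT HeyGen SDK types): just the capabilities the loop needs.
interface ConversationAvatar {
  speak(text: string): Promise<void>;        // step 3
  nextUserTranscript(): Promise<string>;     // step 4
}

interface ChatBackend {
  // Steps 2 and 5: null asks for the opening phrase, otherwise a reply to the user.
  ask(prompt: string | null): Promise<string>;
}

// Runs steps 2-6; returns a log of the conversation for inspection.
async function runConversation(
  avatar: ConversationAvatar,
  backend: ChatBackend,
  maxTurns: number,
): Promise<string[]> {
  const log: string[] = [];
  // Step 2: opening phrase from GPT via my backend.
  let reply = await backend.ask(null);
  for (let turn = 0; turn < maxTurns; turn++) {
    // Step 3: the avatar speaks the GPT response.
    await avatar.speak(reply);
    log.push(`avatar: ${reply}`);
    // Step 4: wait for the transcription of what the user said.
    const userText = await avatar.nextUserTranscript();
    log.push(`user: ${userText}`);
    // Step 5: ask GPT for a reply; step 6 loops back to step 3.
    reply = await backend.ask(userText);
  }
  return log;
}
```

The loop is sequential on purpose: the avatar only listens after it has finished speaking, which matches the order of steps 3 and 4.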

My problem is with step 4. Either my expectations are wrong or my code is, so I'd appreciate some clarification.

The code below omits some of the steps above; it should just start the avatar and (as I expect) listen to the user's voice.

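```typescript
import StreamingAvatar, { AvatarQuality, StreamingEvents } from "@heygen/streaming-avatar";

// Method of my service class (fetchAccessToken and _Avatar are defined elsewhere in the class)
async startSession(videoElement: HTMLVideoElement): Promise<void> {
  // Get a session token from my backend
  const token = await this.fetchAccessToken();
  this._Avatar = new StreamingAvatar({ token });

  // Attach the avatar's media stream to the <video> element once it is ready
  this._Avatar.on(StreamingEvents.STREAM_READY, (x) => {
    videoElement.autoplay = true;
    videoElement.playsInline = true;
    videoElement.muted = false;
    videoElement.srcObject = this._Avatar.mediaStream;
  });

  const sessionData = await this._Avatar.createStartAvatar({
    avatarName: 'Wayne_20240711',
    quality: AvatarQuality.High,
    language: "English",
  });

  // Start voice chat, which I expect to make the SDK listen to and transcribe the user
  // (this is the call that fails)
  await this._Avatar.startVoiceChat({ useSilencePrompt: false });

  //await this._Avatar.startListening()
}
```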

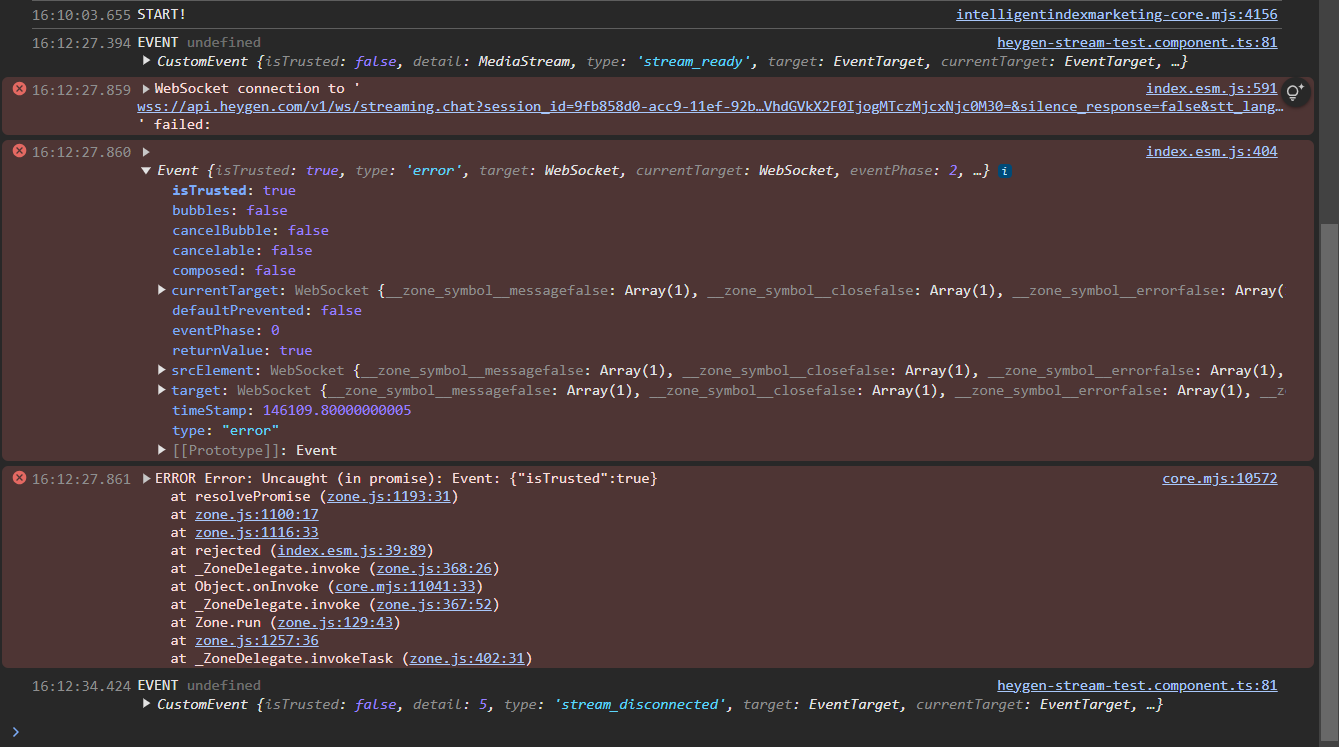

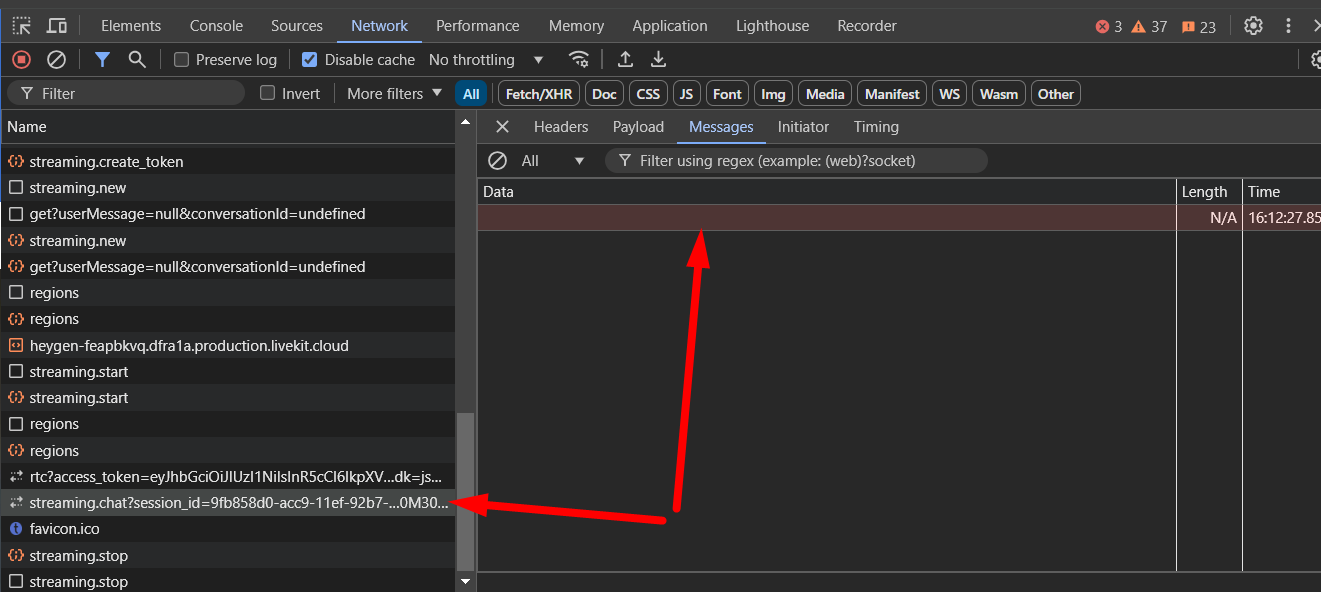

When execution reaches this line:

```typescript
await this._Avatar.startVoiceChat({ useSilencePrompt: false });
```

an error appears in the console; it is caused by a failure to open this WebSocket connection:

```
wss://api.heygen.com/v1/ws/streaming.chat?session_id=9fb858d0-acc9-11ef-92b7-ee31df050ca4&session_token=eyJ0b2tlbiI6ICIzN2Y5MTAyMWRlYzU0Nzc1YTk4NmE2Mjk3YTNjNjY0YiIsICJ0b2tlbl90eXBlIjogInNhX2Zyb21fcmVndWxhciIsICJjcmVhdGVkX2F0IjogMTczMjcxNjc0M30=&silence_response=false&stt_language=English
```
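To double-check what the SDK actually requested, the query string of that URL can be decoded with the standard `URL` API. Judging from the parameters (my inference from the URL, not documented behavior), `useSilencePrompt: false` shows up as `silence_response=false` and `language: "English"` as `stt_language=English`. `describeChatSocket` is a hypothetical helper of mine, and the session token is replaced with a placeholder.

```typescript
// The WebSocket URL from the console error, with the session token replaced by a placeholder.
const wsUrl =
  "wss://api.heygen.com/v1/ws/streaming.chat" +
  "?session_id=9fb858d0-acc9-11ef-92b7-ee31df050ca4" +
  "&session_token=TOKEN_PLACEHOLDER" +
  "&silence_response=false&stt_language=English";

// Hypothetical helper: splits a URL into host, path and decoded query parameters.
function describeChatSocket(raw: string): Record<string, string> {
  const url = new URL(raw);
  const params: Record<string, string> = {};
  url.searchParams.forEach((value, key) => {
    params[key] = value;
  });
  return { host: url.host, path: url.pathname, ...params };
}

console.log(describeChatSocket(wsUrl));
```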

I expect that once this issue is fixed (i.e., the WebSocket connection no longer fails), I will be able to receive the transcription via the appropriate event on the avatar object, like this:

```typescript
this._Avatar.on(StreamingEvents.USER_END_MESSAGE, (event: UserTalkingEndEvent) => {
  // Which of these holds the transcribed text?
  //event?.details
  //event?.text
  //event?.transcription
  //event?.whatever
});
```
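If the event does fire, one way to plug it into step 4 is to turn the next occurrence of the event into a `Promise<string>` that can be awaited. This is a minimal sketch assuming the avatar object exposes `on`/`off` methods with the shape of the hypothetical `EmitterLike` interface below (not a confirmed SDK signature); `nextTranscript` is also a hypothetical name. The text-extraction function is passed in because which property holds the transcription is exactly what I'm unsure about.

```typescript
type Listener = (event: unknown) => void;

// Assumed shape of the avatar object: listeners registered and removed by event name.
interface EmitterLike {
  on(eventName: string, listener: Listener): void;
  off(eventName: string, listener: Listener): void;
}

// Resolves with the user's text the next time `eventName` fires.
function nextTranscript(
  emitter: EmitterLike,
  eventName: string,
  extractText: (event: unknown) => string,
): Promise<string> {
  return new Promise((resolve) => {
    const listener: Listener = (event) => {
      emitter.off(eventName, listener); // one-shot: stop listening after the first event
      resolve(extractText(event));
    };
    emitter.on(eventName, listener);
  });
}
```

Removing the listener after the first event keeps handlers from piling up when this is called once per conversation turn.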

Please tell me whether I'm wrong, and where; and if so, how can I achieve the desired behavior? I know about the "knowledge ID/base" approach with a custom avatar, but as I understand from customer support's explanation, my approach and the "knowledge" approach are two different but equally viable ways of making this work.